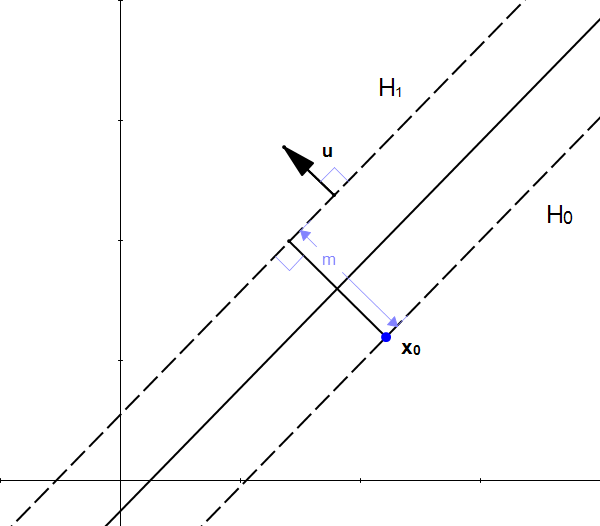

Support Vector Machine (SVM) is a supervised binary classification algorithm. You can use an SVM when your data has exactly two classes. The objective of the algorithm is to find a hyperplane in an N-dimensional space (N being the number of features) that distinctly classifies the data points. An SVM classifies data by finding the best hyperplane that separates the points of one class from those of the other.

To separate the two classes of data points, there are many possible hyperplanes that could be chosen. When the data is separable, there are actually infinitely many separating hyperplanes. The maximal margin hyperplane is the one that lies the farthest (in perpendicular distance) from the data points. The scikit-learn example "SVM: Maximum margin separating hyperplane" plots the maximum margin separating hyperplane within a two-class separable dataset using a Support Vector Machine classifier with a linear kernel; it imports `matplotlib.pyplot`, `svm` from `sklearn`, `make_blobs` from `sklearn.datasets`, and `DecisionBoundaryDisplay` from `sklearn.inspection`.

The geometric margin is the shortest distance between points in the positive examples and points in the negative examples. The points that attain this shortest distance can have a functional margin greater than or equal to 1. However, let us consider the extreme case in which they are closest to the hyperplane, that is, the functional margin of the closest points is exactly equal to 1. Let $x_+$ be a point among the positive examples such that $w^Tx_+ + w_0 = 1$, and let $x_-$ be a point among the negative examples such that $w^Tx_- + w_0 = -1$.

Now, with all the above information, we will try to find $\|x_+ - x_-\|_2$, which is the geometric margin. The distance between $x_+$ and $x_-$ is shortest when $x_+ - x_-$ is perpendicular to the hyperplane.
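As a rough numerical check of this setup, the sketch below constructs $x_+$ and $x_-$ for a given hyperplane and measures the distance between them. Because $x_+ - x_-$ is perpendicular to the hyperplane, it is parallel to $w$, and subtracting the two constraints gives $w^T(x_+ - x_-) = 2$, so the distance should come out as $2 / \|w\|_2$. The helper name `geometric_margin` and the values of `w` and `w0` are illustrative assumptions, not part of the original text.

```python
import math


def geometric_margin(w, w0):
    """Hypothetical helper: return (x_plus, x_minus, margin) for w^T x + w0 = 0.

    x_plus satisfies w^T x + w0 = +1 and x_minus satisfies w^T x + w0 = -1,
    with x_plus - x_minus perpendicular to the hyperplane (parallel to w).
    """
    norm_sq = sum(wi * wi for wi in w)
    if norm_sq == 0:
        raise ValueError("w must be a non-zero vector")

    # Point on the hyperplane itself: w^T x0 + w0 = 0.
    x0 = [-w0 * wi / norm_sq for wi in w]

    # Step along +w / -w until the functional value reaches +1 / -1.
    x_plus = [x0i + wi / norm_sq for x0i, wi in zip(x0, w)]
    x_minus = [x0i - wi / norm_sq for x0i, wi in zip(x0, w)]

    # Euclidean distance ||x_plus - x_minus||_2.
    margin = math.dist(x_plus, x_minus)
    return x_plus, x_minus, margin


# Illustrative values (assumed, not taken from the text).
w, w0 = [3.0, 4.0], -2.0
x_plus, x_minus, margin = geometric_margin(w, w0)

norm_w = math.sqrt(sum(wi * wi for wi in w))
print("margin:", margin)
print("2 / ||w||:", 2 / norm_w)
```

The two printed values should agree up to floating-point error, which is why maximizing the geometric margin is usually phrased as minimizing $\|w\|_2$.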
